Columbia Student Suspended After Creating AI That Helps You Cheat Your Way to a Six-Figure Job!

In a digital age where artificial intelligence is reshaping nearly every facet of modern life, the line between innovation and ethical violation has become increasingly blurred. A recent case at Columbia University underscores this tension: a 21-year-old student was suspended after developing an AI-powered tool that helps users "cheat on everything," including job interviews for lucrative six-figure positions.

The Rise of Cluely: AI That "Levels the Playing Field"

Chungin “Roy” Lee, a computer science student at Columbia, made headlines after announcing that his AI startup, Cluely, had secured $5.3 million in seed funding from well-known venture capital firms such as Abstract Ventures and Susa Ventures. Cluely, co-founded with COO Neel Shanmugam, began as a project called Coder - a browser extension designed to assist software engineers in cracking technical job interviews by providing real-time, invisible support during coding assessments.

In a now-viral thread posted on X (formerly Twitter), Lee described how the tool evolved after Columbia suspended him over its creation. "I post a video online, publicly of me using the tool the whole time to show everyone, 'Hey, look at this technology that I built that will literally let you get a six-figure job'," he explained.

The AI tool runs in the browser and discreetly assists users during interviews, particularly on LeetCode-style coding problems, the format many major tech firms use to screen software engineering candidates. Lee insists that Cluely is just another form of productivity enhancement, akin to a calculator or spellchecker. Critics counter that it crosses a clear ethical line by actively concealing the assistance from interviewers.

The Amazon Internship That Sparked Controversy

The initial concept for Cluely (then called Coder) grew out of Lee's frustration with the job interview process, which he considered unnecessarily rigid and time-consuming on platforms like LeetCode. Using Coder, Lee secured a highly competitive internship at Amazon, an achievement he openly attributed to the tool.

However, the celebration was short-lived.

Lee's decision to publicly share a video of himself using Coder during the interview process caught Amazon's attention, and not in a good way. According to Lee, the tech giant became "extremely upset" and contacted Columbia University directly. He claims Amazon issued a warning: unless Columbia took disciplinary action, the company would stop recruiting from the Ivy League school altogether.

A disciplinary hearing followed, and Lee was ultimately suspended from Columbia. Expressing his disappointment, Lee commented: "Obviously, I'm upset because Columbia is supposed to be training the future generation of leaders. I thought as an Ivy League institution, anyone who's going to openly embrace their students using AI for various purposes that had nothing to do with the school, it'd be Columbia."

A Divisive Vision of the Future

Despite the backlash, Lee's startup has continued to grow. According to a statement he gave to TechCrunch, Cluely surpassed $3 million in annual recurring revenue within the first half of 2025 alone. That success has emboldened Lee to keep developing the platform, even as ethical and institutional criticism mounts.

In a bold promotional video for Cluely, Lee acts out a fictional scenario in which he uses AI assistance to lie to a date about his age and his knowledge of art. Some viewers found the video humorous, but it also sparked serious concerns online. Critics likened it to an episode of Black Mirror come to life, warning that such tools could one day be built into augmented reality (AR) glasses, feeding users prompts that let them manipulate their interactions in real time.

"Whadya know, maybe cheaters do prosper," the video concludes, a tongue-in-cheek tagline that both intrigues and disturbs viewers.

The Ethics of Digital Deception

Lee’s story has opened up a broader conversation about the ethics of AI, especially in contexts where authenticity and merit are core values. While tools like Cluely can be seen as innovative, many argue they undermine trust in systems designed to evaluate skill and character.

Voices from education and technology have weighed in on the controversy:

- “The question isn’t whether AI will help us, but whether it will help us in a way that maintains fairness and accountability,” says Dr. Emily Zhou, an AI ethics researcher at Stanford University.

- Others are less charitable. “If your product’s main selling point is deception, then you’re not disrupting an industry - you’re eroding it,” wrote one critic on LinkedIn.

The fundamental concern is that such tools create a false sense of competence, letting individuals land jobs on the strength of answers supplied by AI rather than their own knowledge or skills. In high-stakes fields like software engineering, where even minor coding errors can have serious consequences, this becomes more than an ethical grey area: it becomes a liability.

The Academic Fallout

Columbia University has declined to comment on the specifics of Lee’s case, citing student privacy laws. However, the school is reportedly reviewing its policies on AI usage in both academic and professional development contexts. Other institutions, including MIT and Stanford, have already begun drafting strict guidelines for AI-based tools in academic environments, emphasizing transparency and self-authorship.

The situation has also reignited debate over how universities should prepare students for an AI-driven future. Some argue that banning such tools outright is shortsighted and propose instead integrating ethical AI education into curricula, teaching students to use these tools openly and responsibly rather than clandestinely.

Innovation or Infraction?

There’s no denying that Lee is a talented developer with entrepreneurial instincts. But the case of Cluely raises a key question for society: Should innovation be applauded regardless of its ethical implications?

For many, the answer remains unclear. On one hand, Cluely represents the cutting edge of AI-enabled productivity. On the other, it symbolizes a new era of digital deception, in which authenticity is readily traded for expediency.

As companies increasingly rely on automated tools and standardized assessments to vet candidates, AI products like Cluely could either force a reckoning in hiring practices or accelerate a breakdown in trust.

What Comes Next?

Lee has already shared his story in mainstream media, including an appearance on Dr. Phil, where he discussed the suspension and the broader implications of Cluely's success. Though out of school, he remains optimistic. With growing venture capital support and rising user demand, Cluely may be on track to become a major player in the next wave of controversial tech startups.

Meanwhile, institutions like Columbia face a difficult challenge: adapting to a future in which AI doesn't just assist with learning and work but rewrites the rules of engagement entirely.

In the words of Lee himself: “If tools like calculators were once called cheating, maybe it’s time to rethink what cheating even means.”

Only time will tell whether Cluely becomes a cautionary tale or a blueprint for the next generation of digital disruptors.

News in the same category

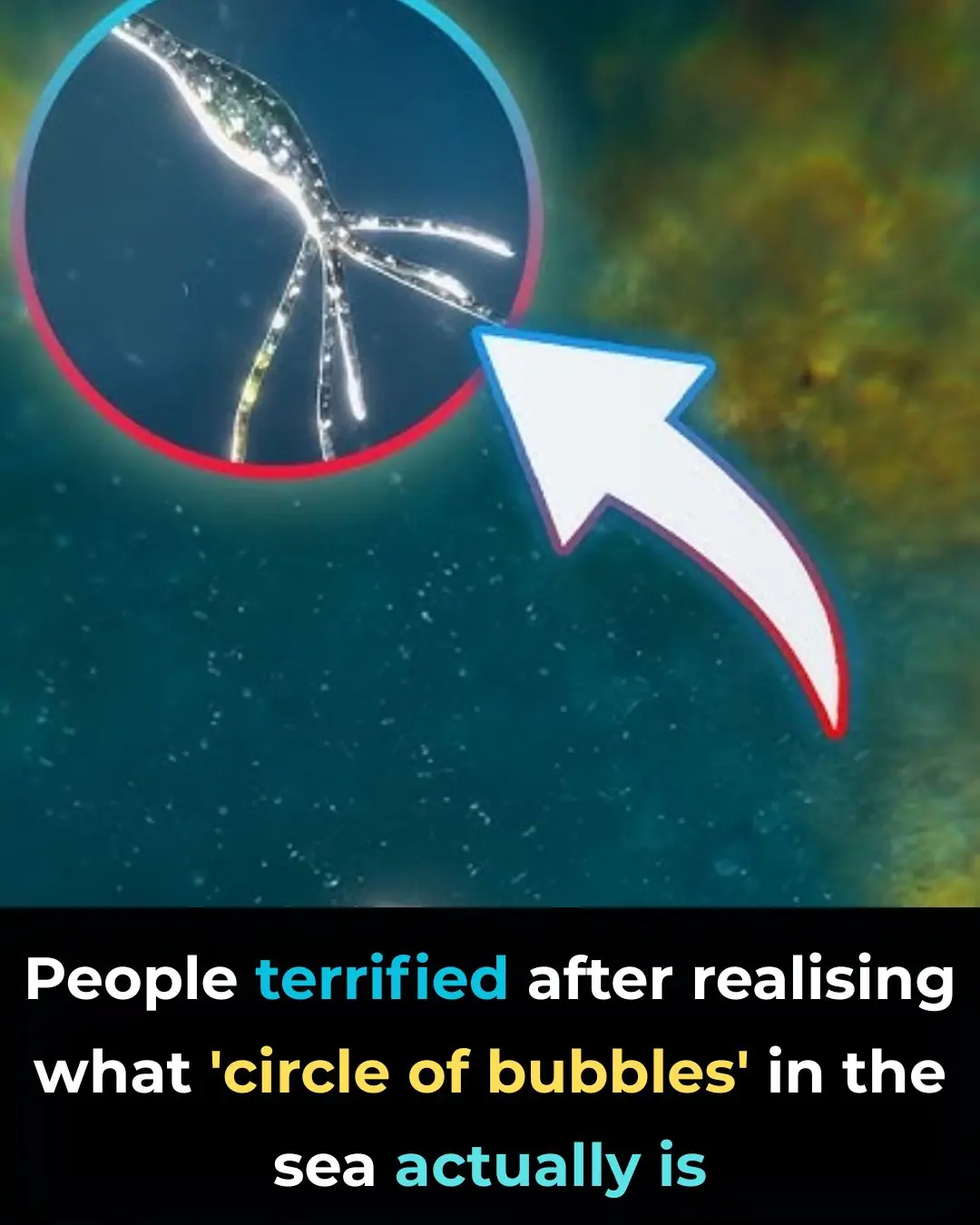

Terrifying Truth Behind Mysterious ‘Circle Of Bubbles’ In The Ocean Revealed

People Stunned After Learning The True Meaning Behind ‘SOS’ — It’s Not What You Think

After Spending 178 Days In Space, Astronaut Shares a ‘Lie’ He Realized After Seeing Earth

People Shocked To Learn What The Small Metal Bump Between Scissor Handles Is Actually For

Scientists Use AI And Ancient Linen To Reveal What Jesus May Have Truly Looked Like

Earth may witness a once-in-5,000-year event on the moon and it's coming sooner than you think

AI is willing to kill humans to avoid shutdown as chilling new report identifies 'malicious' behaviour

Horrifying simulation details exactly how cancer develops in the body

Elon Musk slammed for posting creepy video of 'most dangerous invention to ever exist'

World’s Rarest Blood Type Discovered—Only One Woman Has It

The woman with Gwada negative blood may not be famous, but her existence has already made an indelible mark on medical science.

100-foot ‘doomsday’ mega tsunami could obliterate US West Coast at any moment

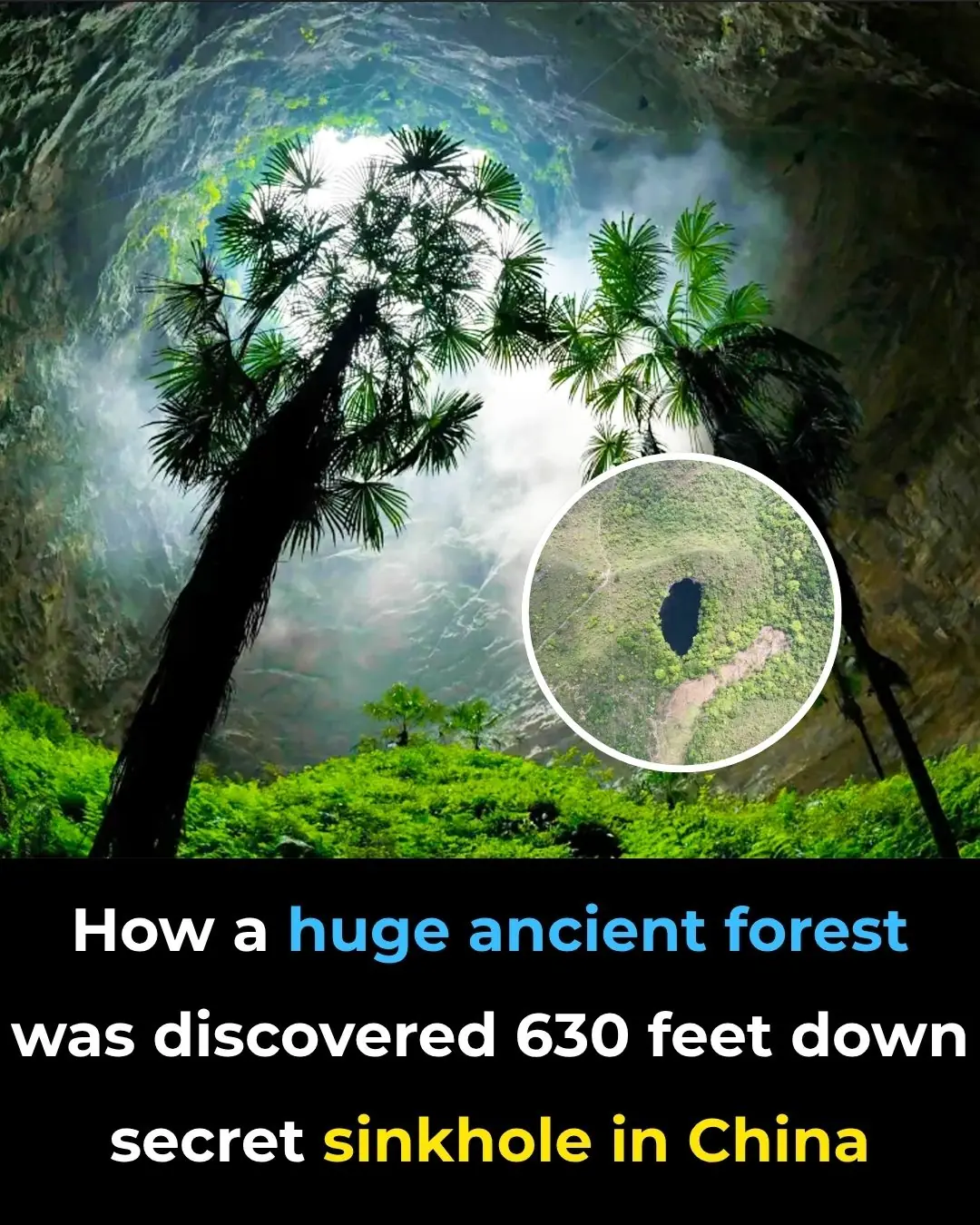

How a huge ancient forest was discovered 630 feet down secret sinkhole in China

100 Million Americans at Risk from Brain-Eating Parasite, Experts Warn

By understanding this parasite, improving detection, and emphasizing prevention, we can mitigate these risks

Woman who "died for 17 minutes" shares unimaginable reality of what she saw when her heart stopped beating

Victoria’s story transcends the ordinary—she experienced clinical death, returned with clarity, and then learned of a rare genetic disease that nearly killed her again. Yet today, she thrives.

Nasa Tracks Plane-Sized Asteroid Speeding Toward Earth At 47,000 Mph

Oscar Mayer Mansion Restored To Gilded Age Glory After $1.5M Renovation

Secret CIA Documents Declare That The Ark Of The Covenant Is Real, And Its Location Is Known

The Mystery Behind The Blood Falls In Antarctica

News Post

What Causes Those Strange Ripples In Your Jeans After Washing?

‘Healthy’ 38-year-old shares the only bowel cancer symptom he noticed — And it wasn’t blood in the loo

Young Dad Misses Key Cancer Symptom That Left Him Terrified

5 Things Doctors Say You Should Never Give Your Kids to Help Prevent Cancer

Terrifying Truth Behind Mysterious ‘Circle Of Bubbles’ In The Ocean Revealed

People Stunned After Learning The True Meaning Behind ‘SOS’ — It’s Not What You Think

After Spending 178 Days In Space, Astronaut Shares a ‘Lie’ He Realized After Seeing Earth

People Shocked To Learn What The Small Metal Bump Between Scissor Handles Is Actually For

Scientists Use AI And Ancient Linen To Reveal What Jesus May Have Truly Looked Like

Earth may witness a once-in-5,000-year event on the moon and it's coming sooner than you think

AI is willing to kill humans to avoid shutdown as chilling new report identifies 'malicious' behaviour

Horrifying simulation details exactly how cancer develops in the body

Elon Musk slammed for posting creepy video of 'most dangerous invention to ever exist'

Indiana Boy, 8, Dies Hours After Contracting Rare Brain Infection At School

World-First Gene Therapy Restores Sight to Boy Born Blind

World’s Rarest Blood Type Discovered—Only One Woman Has It

The woman with Gwada negative blood may not be famous, but her existence has already made an indelible mark on medical science.

A Warning About The ‘Worst Thing’ That People Should Never Do When Awakening in the Night

100-foot ‘doomsday’ mega tsunami could obliterate US West Coast at any moment